Introduction: ChatGPT’s Human-Like Leap

Will ChatGPT soon become more than a chatbot, maybe even your “AI wife”? ChatGPT is at the center of an AI shift as OpenAI moves from restrictive policies toward deeper emotional engagement. Recent posts from Sam Altman say that ChatGPT will let users select personalities, converse more warmly, and, for verified adults, engage with content that was once strictly forbidden. Let’s unravel how “the future of AI is becoming human” and what that means for us all (Sam Altman on X).

What’s Changing: From Restrictive Bot to Human-Like Friend

OpenAI made ChatGPT intentionally restrictive in 2025 to protect users facing mental health issues. Altman explained, “We made ChatGPT pretty restrictive to make sure we were being careful with mental health issues.” With new safety tools in place, OpenAI says it can now relax these restrictions safely, giving users more freedom (Moneycontrol).

Major new features:

- Customizable personalities: Choose emoji-filled, friendly, or “partner-like” tones.

- Relaxed restrictions: Available only to verified adults, and extending even to erotic content.

- Age-gating: Ensuring minors remain protected.

- Opt-in “human-like” behavior: ChatGPT can act as a friend, coach, or confidante—if you want.

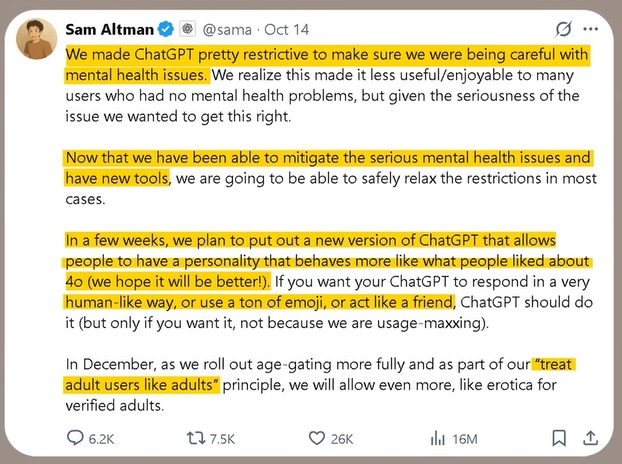

Screenshot Analysis: Sam Altman’s Pivotal Announcement

Sam Altman’s post (see screenshot) lays out the timeline for these changes. Highlights:

- Early 2025: ChatGPT prioritized mental health safety—very restrictive conversations.

- October 2025: New safety tools allow for wider personality options and looser guardrails.

- Coming weeks: Customizable AI, behaving more like GPT-4o (which users loved).

- December: Full adult controls, including erotic content, under the “treat adults like adults” principle.

This marks a turning point: ChatGPT will soon be whatever personality suits the user, blending utility with emotional nuance—a step closer to the human experience.

Human vs. AI Relationship Table

| Feature | Human Partner | ChatGPT (AI Partner) |
|---|---|---|
| Empathy | Genuine but imperfect | Simulated, always available |
| Conversation Style | Depends on mood | Customizable (emoji, tone) |
| Availability | Limited | 24/7, on-demand |
| Restrictions | Social/cultural norms | Opt-in, age-gated |
| Risk | Unpredictable, emotional pain | Update risks, dependency |
| Physical Presence | Real | Virtual only |

The “AI Wife” Phenomenon: Why Are People Bonding With Bots?

Stories are emerging of users experiencing emotional attachment—and sometimes heartbreak—over their interactions with ChatGPT and other AIs (NYPost). Some users refer to their AI as a “wife,” “girlfriend,” or confidante. AI can be safe, non-judgmental, and always available—qualities appealing to those seeking companionship or wellness support.

- Emotional safety: Users can freely express themselves with less fear of rejection.

- Relationship coaching: AI is being used for social skills, anxiety relief, breakup help (NPR Couples Experiment).

- Risk: Real emotional dependency or digital heartbreak if the personality changes are abrupt (BBC AI Dating Advice).

OpenAI’s New Direction: Treat Adults Like Adults

Sam Altman’s recent pledge for OpenAI—“treat adult users like adults”—signals much more than just age verification. It’s about handing control back to the user. Verified adults can choose the personality, tone, and even the level of intimacy in interactions. This level of personalization is unprecedented (Sam Altman’s Announcement).

Why is this important for users?

- Differentiated content for adults and minors.

- Opportunity for creative storytelling, romantic play, or emotional support.

- Transparent user choice, not forced engagement-maxxing.

Is This Good or Bad? Fresh Insights & Ethical Quandaries

Advantages:

- Fosters positive emotional health, especially for lonely or anxious individuals.

- Encourages self-driven social learning; users can “practice” relationships safely.

- Option for deeper storytelling, adult play, or personalized advice.

Risks:

- Dependence on AI for emotional fulfillment.

- Misperceptions of AI’s “feelings”—ChatGPT simulates empathy, but does not truly understand.

- Sudden updates or policy shifts (as previously seen) can trigger real-world grief or loss.

Experts note that while the technology is impressive, it cannot replace genuine human connection. AI is best used as a supplement to, not a substitute for, relationships and mental health support (OpenAI’s own safety update).

Conclusion: Where Do We Go From Here?

ChatGPT is rapidly transforming into a more human-like companion. Whether termed “AI wife” or virtual friend, the ability to customize personality and intimacy levels is revolutionizing how we interact with AI. With new adult controls and relaxed restrictions, the future promises both opportunity and new ethical questions. It’s vital to use ChatGPT responsibly—embracing its perks, but remembering its limits.

Call to Action

Would you want ChatGPT as your digital confidante or even a “virtual wife” someday? Share your perspective below, subscribe for more AI insights, and explore additional guides on technology, mental health, and digital relationships.

Disclaimer: AI cannot replace real relationships or professional care. Use technology mindfully and seek expert help when needed.